Configuration templates

To run any Spark application, we need to define some configuration. Ocean for Spark provides a concept for this called a configuration template. As its name indicates, it is a template that contains all the configurations needed to run a Spark application, like this example:

    {
      "type": "Python",
      "sparkVersion": "3.2.1",
      "image": "gcr.io/datamechanics/spark:platform-3.2-dm17",
      "imagePullPolicy": "IfNotPresent",
      "sparkConf": {
        "spark.sql.adaptive.enabled": "true",
        "spark.dynamicAllocation.enabled": "true",
        "spark.cleaner.periodicGC.interval": "1min",
        "spark.sql.execution.arrow.enabled": "true",
        "spark.dynamicAllocation.maxExecutors": "6",
        "spark.kubernetes.allocation.batch.size": "5",
        "spark.dynamicAllocation.initialExecutors": "1",
        "spark.sql.execution.arrow.pyspark.enabled": "true",
        "spark.dynamicAllocation.executorAllocationRatio": "0.33",
        "spark.dynamicAllocation.shuffleTracking.enabled": "true",
        "spark.scheduler.maxRegisteredResourcesWaitingTime": "1000",
        "spark.kubernetes.allocation.driver.readinessTimeout": "120s",
        "spark.dynamicAllocation.sustainedSchedulerBacklogTimeout": "60"
      },
      "driver": {
        "cores": 1,
        "spot": false
      },
      "executor": {
        "cores": 4,
        "spot": true
      }
    }
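Before pasting a template into the console, it can help to sanity-check it locally. The following is a minimal sketch, not part of Ocean for Spark: `check_template` is a hypothetical helper, the template is abbreviated to a few keys from the example above, and the rules it checks (string-valued `sparkConf` entries, `initialExecutors` not exceeding `maxExecutors`, a `driver` and an `executor` section) are assumptions drawn from that example rather than documented requirements.

```python
import json
from typing import List

# Abbreviated copy of the workshop template (only the keys this sketch inspects).
TEMPLATE_JSON = """
{
  "type": "Python",
  "sparkVersion": "3.2.1",
  "sparkConf": {
    "spark.dynamicAllocation.enabled": "true",
    "spark.dynamicAllocation.initialExecutors": "1",
    "spark.dynamicAllocation.maxExecutors": "6"
  },
  "driver": {"cores": 1, "spot": false},
  "executor": {"cores": 4, "spot": true}
}
"""


def check_template(template: dict) -> List[str]:
    """Hypothetical helper: return a list of problems found in a template."""
    problems = []
    conf = template.get("sparkConf", {})

    # Assumption: the example writes every sparkConf value as a string,
    # so flag anything that is not one.
    for key, value in conf.items():
        if not isinstance(value, str):
            problems.append(f"{key}: expected a string, got {type(value).__name__}")

    # With dynamic allocation on, the initial executor count should not
    # exceed the configured maximum.
    if conf.get("spark.dynamicAllocation.enabled") == "true":
        initial = int(conf.get("spark.dynamicAllocation.initialExecutors", "0"))
        maximum = conf.get("spark.dynamicAllocation.maxExecutors")
        if maximum is not None and initial > int(maximum):
            problems.append("initialExecutors is larger than maxExecutors")

    # Assumption: both pod sections shown in the example should be present.
    for role in ("driver", "executor"):
        if role not in template:
            problems.append(f"missing '{role}' section")

    return problems


template = json.loads(TEMPLATE_JSON)
problems = check_template(template)
print(problems or "template looks consistent")
```

`json.loads` also catches plain syntax mistakes (a missing comma or quote) before the template ever reaches the console.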

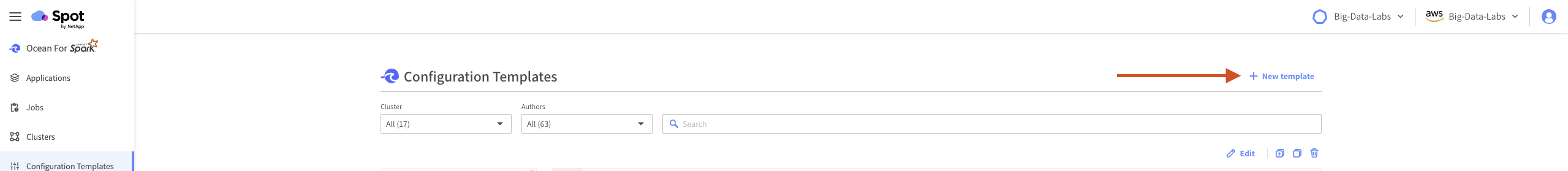

To create this configuration template, go to the Ocean for Spark page in the Spot console, choose the Configuration Templates tab on the left, and click New Template.

Then add the configuration shown above and name the template ocean-workshop. Once this is done, we are ready to run our code.

We will run the code using 3 methods: